Intro

Guest post from Barry Green, Interim Chief Data Officer at Allianz Partners.

In March 2019 our CDO Hub event topic was Tools and Technologies, with the discussion led by our friend and (one of the first!) CDO Hub member, Barry Green.

At the event Barry ran through the types of tooling functionality needed to implement a data management framework. This covered functional groupings and different implementation approaches. A broad framework was presented to provide context for the tooling functionality.

And he also put this together for us to share with you…

Data Management Tooling

1. Background

The use of data and information for competitive advantage requires an organisation to implement a holistic data management capability. This capability is developed using a data management framework, which supports both data and information management. In addition, the framework should help the organisation improve operational effectiveness, support innovation and enable rapid change.

The three core components for success are:

- Culture Change: Cultural change happens over time through increased understanding and management of data and process. This needs collaboration, communication and education, backed by strong leadership support. Breaking down organisational silos allows data to support a customer- or product-driven approach, which will require organisational change over time.

- Consistency: Implementing the data management framework provides a consistent approach and way of working. Supporting tooling is used to capture and reuse institutional knowledge (business and technical metadata). This also supports ongoing education and engagement.

- Discovery: Information is improved by discovering, defining, tagging and securing data, and by understanding its context. The framework uses tools to store and reuse institutional knowledge. Process understanding drives efficiency and an improved customer experience.

When combined, these components increase an organisation's ability to change rapidly. The objective is rapid change, built on a base of knowledge captured while implementing the framework.

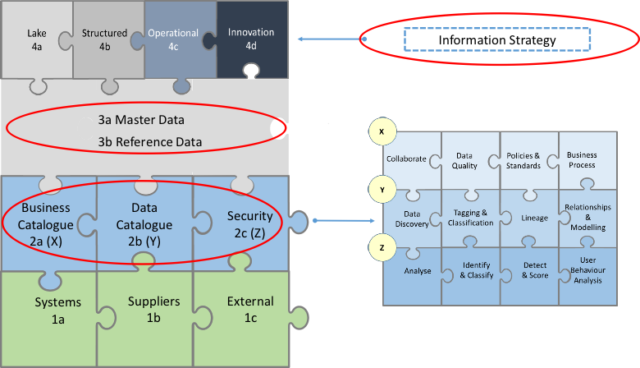

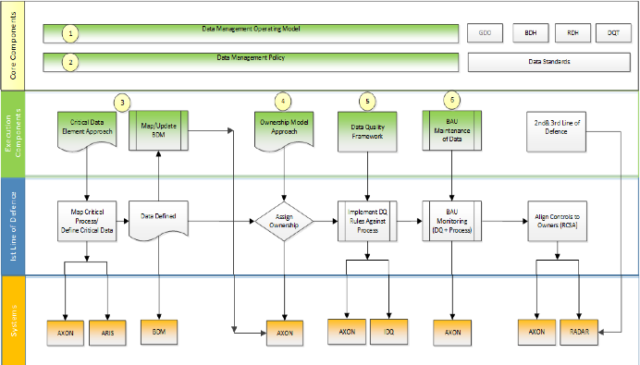

The tooling blocks supporting data management are defined and briefly explained in this blog. The relationship of these tools to the organisation's existing systems and external data sources is shown in Figure 1.

Figure 1 should be read from bottom to top. Layers one to four highlight the dependencies when implementing tools that support the framework. The highlighted areas represent key components that are often missing from an organisation's approach to managing data and information.

1.1 Layer One

Data is held in various internal and external systems and is provided by multiple parties, e.g. customers and suppliers. This foundational data needs to be managed and understood, and is represented in Figure 1 by 1a, 1b and 1c.

1.2 Layer Two

The second layer in Figure 1 (2a, 2b and 2c) is where data is understood and managed. It has three distinct components: a business view, a metadata view and a security view. In the figure, these components are expanded to the right into four key pieces that define the key functionality.

1.3 Layer Three

Within the third layer, 3a is not a strict dependency for the provision of information. However, 3b is needed to ensure that the right structures exist to manage the business and report on the organisation. When reference data is standardised and harmonised, information provision is improved through more timely, accurate and valid reporting.

When implemented, 3a Master Data Management (‘MDM’) may be used to feed key reporting source platforms. Whether to use it in this way is one of the key decisions that will need to be made, and it is important to recognise that doing so involves a large integration component.

1.4 Layer Four

The information layer (4a, 4b, 4c and 4d) is usually present in some form. An information strategy is developed to drive consistency and an understanding of how tools, data and structures interact to provide information to a varied user base. This brings together the business, data and architecture strategies.

2. Tooling

The following sections provide a brief description of each layer. It is important to use a list of functional requirements to drive tooling choices; this functional list also provides a basis for understanding and shaping the architecture roadmap. A gap analysis of the “as is” state against the list helps you prioritise what to implement first in support of organisational priorities.

Consideration should also be given to how you decommission end-user computing solutions and bespoke departmental tools. The objective is to bring together all contextually relevant information so that it can be shared across the organisation. This helps build the organisational “window” through collaboration and understanding.

It is also important to understand that some functionality is common across tools. Your functional list should therefore be a single list covering all of the tools described.
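To make the single functional list and the “as is” gap analysis more concrete, here is a minimal Python sketch. It assumes each tool's capabilities are recorded as plain text names; the tool names, capability names and priority scale are hypothetical illustrations rather than part of the framework. Common functionality collapses into one entry, and unmet functions are ordered by priority.

```python
from __future__ import annotations

# Hypothetical per-tool capability lists; names are illustrative only.
PER_TOOL = {
    "Data Management": ["business glossary", "metadata discovery", "process mapping"],
    "Data Catalogue": ["metadata discovery", "data lineage"],
    "Data Security": ["metadata discovery", "sensitive data tagging"],
}


def build_functional_list(per_tool: dict[str, list[str]]) -> dict[str, list[str]]:
    """Merge per-tool lists into one list: each function appears once,
    together with the tools that need it."""
    merged: dict[str, list[str]] = {}
    for tool, functions in per_tool.items():
        for function in functions:
            merged.setdefault(function, []).append(tool)
    return merged


def gap_analysis(
    functional_list: dict[str, list[str]],
    as_is: set[str],
    priority: dict[str, int],
) -> list[str]:
    """Return functions not delivered in the 'as is' state, ordered by
    priority (1 = highest; unranked functions go last), then by name."""
    gaps = [f for f in functional_list if f not in as_is]
    return sorted(gaps, key=lambda f: (priority.get(f, float("inf")), f))


if __name__ == "__main__":
    functional_list = build_functional_list(PER_TOOL)
    delivered = {"data lineage"}  # hypothetical "as is" capability
    ranking = {"business glossary": 1, "sensitive data tagging": 2}
    for function in gap_analysis(functional_list, delivered, ranking):
        print(function, "->", ", ".join(functional_list[function]))
```

Keeping the tools that need each function alongside it makes shared functionality (here, metadata discovery) visible, which can inform whether one tool should provide it for the others.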

2.1 Systems (1a to 1c)

Information is provided through raw data acquired via the organisation's systems, with enrichment adding new data points. Infrastructure can be mixed, so obtaining the right data is often complex.

The assumption is that there are legacy systems with core issues, for example:

- No product codes;

- Limited fields for development;

- Limits to customisation (e.g. field length);

- Written in old programming languages, with knowledge not documented;

- Poorly documented configuration.

There is also supplier data with issues, for example:

- Poor quality;

- No definitions or standards defined and applied;

- Incomplete records, i.e. minimum data requirements not met.

There may also be external data sources providing semi-structured or unstructured data. This data is assumed to be:

- Not tagged or tagged inconsistently;

- Collected and largely unused;

- Used by data scientists, who clean and tag it for their own analyses with minimal correction at source, so this work is duplicated multiple times.

Not having an approach to managing and understanding data causes issues both operationally and strategically. Key known issues are:

- Large number of reconciliations undertaken on an intra-month and monthly basis;

- Regular data cleaning exercises undertaken on an intra-month and monthly basis;

- Inconsistent reporting;

- Data misused in analysis.

To provide more robust information, both data and process need to be managed.

This is not done purely to have better data. The objective is the identification of new revenue opportunities, cost efficiencies and better customer service. Better data simply supports these objectives.

Implementing the data management framework provides structure, consistency and reuse of internal knowledge. The framework has six core components:

- Operating model;

- Policy and standards;

- Mapping critical business processes and defining their critical data, and mapping that data to a conceptual model (with physical attributes linked via tooling);

- Assigning ownership of critical processes and data;

- Applying data quality checks across a process;

- Ongoing validation of processes and data to ensure they are “fit for purpose” and that documentation is current.

2.2 Data Management Tools

The key functional blocks are briefly defined below. In addition to a detailed functional list, the following should also be considered:

- whether the functionality has a dependency that must be in place before it can be delivered;

- whether technical integration is needed, requiring IT resources;

- whether there is a cost overhead for continuous operation; and

- whether organisational change may be required for the capability to be sustainable and mature.

2.2.1 Data Management Aggregation

The business catalogue is in effect an aggregator of information, providing context to the organisation through a combination of technical and business metadata.

The tool is effectively the “window” into the organisation, i.e. into how it operates both internally and externally. Over time, this supports the ability to manage agile and rapid change.

2.2.2 Data Management 2a (X)

Data Management is the core tooling. It provides a place to aggregate key business and technical metadata, using semi-automated discovery based on machine learning. While data quality is a key component, its tooling is separate. The business catalogue provides the ability to record and manage data and process, and supports information delivery by providing additional context. Effectively, this provides a “window” for the organisation onto what is done and how it is executed. It’s important to note that a defined approach to data, including ownership, is an essential requirement before tooling is implemented. Tooling is an enabler only.
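As an illustration of how a business catalogue links business metadata, process and technical metadata, the following minimal Python sketch models a catalogue entry. It is a hypothetical, in-memory structure (the class names, fields and example systems are assumptions, not any product's data model); it also reflects the point that ownership should be agreed before an item is catalogued.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PhysicalAttribute:
    """Technical metadata: where a data item is physically held."""
    system: str
    table: str
    column: str


@dataclass
class CatalogueEntry:
    """Business metadata for one critical data item, linked to the
    processes that use it and the physical attributes that hold it."""
    term: str
    definition: str
    owner: Optional[str]
    processes: List[str] = field(default_factory=list)
    attributes: List[PhysicalAttribute] = field(default_factory=list)


class BusinessCatalogue:
    """Minimal in-memory catalogue (hypothetical; a real tool would persist
    entries and populate technical metadata via automated discovery)."""

    def __init__(self) -> None:
        self._entries: Dict[str, CatalogueEntry] = {}

    def register(self, entry: CatalogueEntry) -> None:
        # Tooling is an enabler only: an entry without an agreed owner
        # is rejected rather than silently catalogued.
        if not entry.owner:
            raise ValueError(f"{entry.term!r} has no owner; agree ownership first")
        self._entries[entry.term.lower()] = entry

    def lookup(self, term: str) -> Optional[CatalogueEntry]:
        """Case-insensitive lookup of a business term."""
        return self._entries.get(term.lower())

    def terms_held_in(self, system: str) -> List[str]:
        """Business terms with at least one physical attribute in `system`."""
        return sorted(
            e.term for e in self._entries.values()
            if any(a.system == system for a in e.attributes)
        )


if __name__ == "__main__":
    catalogue = BusinessCatalogue()
    catalogue.register(CatalogueEntry(
        term="Customer Email",  # hypothetical critical data item
        definition="Primary email address agreed with the customer for contact.",
        owner="Head of Customer Operations",
        processes=["Customer onboarding"],
        attributes=[PhysicalAttribute("crm", "customer", "email_addr")],
    ))
    print(catalogue.terms_held_in("crm"))
```

A query such as `terms_held_in` hints at the “window” idea: starting from a system, you can see which business concepts depend on it, and from each entry, who owns it and which processes use it.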

2.2.3 Data Quality 2a (X)

Data quality tools are needed to assess the quality of data in source systems through the lens of a process, in reference data tools, and against master data records. They can also be used to validate machine learning matches produced by the other tooling. Data quality tools do not fix data, but they play a key role in:

- Identifying data conflicts;

- Identifying data deficiencies;

- Providing quality scores using rules against thresholds, to allow judgement on material data quality issues;

- Understanding the overall quality of data sets for use with analytical models (i.e. not all data needs high thresholds to be used effectively);

- Using external validation sources, e.g. to validate customer, supplier or other key domain data;

- Ensuring that data consumed can be given a quality stamp. This allows the consumer to understand whether it can be used to support decision making, i.e. the limitations of the information provided.
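Scoring rules against thresholds and deriving a quality stamp can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: records are plain dictionaries, each hypothetical rule carries its own threshold (so less critical data can pass with a lower one), and the stamp wording is invented for the example. Note that the tool only scores and flags data; it does not fix it.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]


@dataclass(frozen=True)
class Rule:
    """A data quality rule (hypothetical structure)."""
    name: str
    check: Callable[[Record], bool]  # True when a record passes the rule
    threshold: float                 # minimum acceptable pass rate, 0.0 to 1.0


def score(records: List[Record], rules: List[Rule]) -> Dict[str, Tuple[float, bool]]:
    """Return {rule name: (pass rate, meets threshold)}.
    An empty data set scores 0.0 and therefore fails every rule."""
    results: Dict[str, Tuple[float, bool]] = {}
    for rule in rules:
        passed = sum(1 for record in records if rule.check(record))
        rate = passed / len(records) if records else 0.0
        results[rule.name] = (rate, rate >= rule.threshold)
    return results


def quality_stamp(results: Dict[str, Tuple[float, bool]]) -> Tuple[str, List[str]]:
    """Summarise scores for consumers: a stamp plus any rules below threshold."""
    failing = sorted(name for name, (_, ok) in results.items() if not ok)
    return ("fit for use", []) if not failing else ("use with caution", failing)


if __name__ == "__main__":
    # Hypothetical supplier records with incomplete fields.
    suppliers = [
        {"supplier_id": "S1", "country": "GB", "tax_id": "123"},
        {"supplier_id": "S2", "country": "", "tax_id": "456"},
        {"supplier_id": "S3", "country": "FR", "tax_id": None},
        {"supplier_id": "S4", "country": "DE", "tax_id": "789"},
    ]
    rules = [
        # Hypothetical thresholds: country drives reporting, so it must be near-complete.
        Rule("country populated", lambda r: bool(r.get("country")), 0.95),
        Rule("tax id populated", lambda r: bool(r.get("tax_id")), 0.5),
    ]
    results = score(suppliers, rules)
    print(results)
    print(quality_stamp(results))
```

Returning the failing rule names with the stamp, rather than a bare pass/fail, gives the consumer the “limitations of the information provided” that the stamp is meant to convey.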

2.2.4 Data Catalogue 2b (Y)

It’s essential to identify and integrate a variety of information types, and to understand what they mean and how they work within the organisation's landscape. This supports cost efficiency, regulatory compliance and digital transformation. Figure 1 (Tooling Building Blocks) highlights the capabilities an organisation needs to understand and manage data and information. The data catalogue is the more technical part of the core data management tooling.

2.2.5 Data Security 2c (Z)

Data security is a specific type of service that uses much of the core functionality defined in 2.2.4. The outcome, however, is highly specialised: it is designed to ensure that the data and information landscape is protected and meets any external compliance requirements.

2.2.6 Reference & Master Data Management (3a & 3b)

Reference data is essential for the development of robust, timely and valid information. Good published reporting needs agreed structures and values with which to produce consistent and understandable information. Any published external or internal information or reporting should rest on a solid foundation. This includes the need to define key hierarchies and values, and to map differences between them where multiple versions exist.
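Mapping differences between hierarchies where multiple versions exist can be illustrated with a small Python sketch. It assumes a single agreed group hierarchy and a lookup from each source's local codes onto the agreed codes; all codes, sources and amounts are hypothetical. Reporting then rolls local data up the agreed structure, and any unmapped local code is surfaced rather than quietly lost.

```python
from __future__ import annotations

from typing import Dict, List, Optional, Tuple

# Hypothetical agreed group product hierarchy: code -> parent (None = top level).
GROUP_HIERARCHY: Dict[str, Optional[str]] = {
    "TRAVEL": None,
    "TRAVEL_SINGLE": "TRAVEL",
    "TRAVEL_ANNUAL": "TRAVEL",
    "ASSISTANCE": None,
}

# Hypothetical mapping of local (source, code) pairs onto the agreed codes,
# capturing the differences between local versions of the hierarchy.
LOCAL_TO_GROUP: Dict[Tuple[str, str], str] = {
    ("uk_branch", "ST01"): "TRAVEL_SINGLE",
    ("uk_branch", "AT01"): "TRAVEL_ANNUAL",
    ("fr_branch", "VOY-S"): "TRAVEL_SINGLE",
    ("fr_branch", "ASS-1"): "ASSISTANCE",
}


def roll_up(code: str) -> List[str]:
    """Path from an agreed code up to its top-level ancestor."""
    path = [code]
    parent = GROUP_HIERARCHY[code]
    while parent is not None:
        path.append(parent)
        parent = GROUP_HIERARCHY[parent]
    return path


def aggregate(rows: List[Tuple[str, str, int]]) -> Tuple[Dict[str, int], List[Tuple[str, str]]]:
    """Total amounts by top-level agreed category. Rows with unmapped local
    codes are excluded from the totals but reported back for remediation."""
    totals: Dict[str, int] = {}
    unmapped: List[Tuple[str, str]] = []
    for source, local_code, amount in rows:
        group_code = LOCAL_TO_GROUP.get((source, local_code))
        if group_code is None:
            unmapped.append((source, local_code))
            continue
        top = roll_up(group_code)[-1]
        totals[top] = totals.get(top, 0) + amount
    return totals, unmapped


if __name__ == "__main__":
    rows = [
        ("uk_branch", "ST01", 100),
        ("fr_branch", "VOY-S", 50),
        ("uk_branch", "AT01", 25),
        ("fr_branch", "ASS-1", 40),
        ("fr_branch", "XX", 10),  # hypothetical code with no agreed mapping
    ]
    print(aggregate(rows))
```

Keeping the mapping as data, separate from the reporting logic, means a new local version of a hierarchy can be onboarded by extending the mapping rather than changing every report.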

The critical decision before any MDM implementation is to understand what part MDM will play in the organisation. This may evolve over time, but a very clear scope for MDM needs to be defined. It is assumed that all core data management activity (teams, process and tooling) is in place and scalable.
